Shingled Magnetic Recording (SMR)

The Seagate Archive HDD has a record-breaking data density of 1.33 TB per platter (spinning disk), a huge increase over the previous record of 1 TB per platter. It also means the 8 TB model houses no fewer than 6 platters, which is an impressive number. Going higher is currently only possible with more expensive helium-filled hard disk drives.

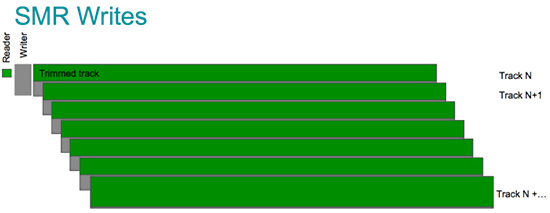

To achieve such a high platter density Seagate uses a new technology that it has branded Shingled Magnetic Recording, or SMR. Instead of making the data tracks smaller, SMR makes the data tracks overlap, much like roof shingles on a house. You might assume this creates a problem. However, because the reader of an HDD is much narrower than the writer, the data can still be read from the uncovered part of the trimmed track without compromising it. This also means SMR is basically re-using existing technology in a clever way, which is always a good sign for the reliability and availability of the product.

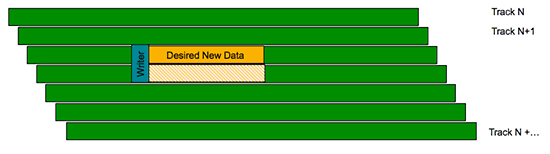

However, SMR has a downside. Because writers are much wider than readers, it is impossible to write new data without affecting the data on the neighboring, overlapping tracks.

Therefore, much like on an SSD, before each write the original data on the neighboring tracks is read and then written back together with the new data. This means random write performance will be significantly reduced once there is some data on the neighboring tracks. Our benchmarks will determine if this is really an issue in day-to-day usage.
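To make that read-and-write-back cycle concrete, here is a minimal sketch in Python. It is a toy model, not Seagate's firmware: the idea of a fixed group of overlapping tracks (called a "band" here), the `BAND_TRACKS` size and the `rewrite_track` helper are all assumptions made purely for illustration.

```python
# Toy model of a group of shingled tracks: writing track i clobbers track i+1,
# so every track after the one being updated has to be rewritten in order.
# BAND_TRACKS and the string payloads are illustrative, not drive internals.

BAND_TRACKS = 5


def rewrite_track(band, index, new_data):
    """Update one track in a shingled band; return (band, tracks_written)."""
    # 1. Read back everything the wide writer is about to damage.
    saved = band[index + 1:]
    # 2. Write the new data; this overlaps (and destroys) the next track.
    band = band[:index] + [new_data]
    # 3. Re-lay the saved tracks in order, each one shingled over the last.
    band = band + saved
    return band, 1 + len(saved)


band = [f"track{i}" for i in range(BAND_TRACKS)]
band, written = rewrite_track(band, 1, "NEW")
print(band)     # ['track0', 'NEW', 'track2', 'track3', 'track4']
print(written)  # 4 physical track writes for 1 logical update
```

In this simplified picture a single small update near the start of a band costs several physical track writes, which is why random writes are the workload to watch.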

Putting SMR to the test

The Archive HDD is the first SMR drive on the market. We already know the drive should be power efficient and reliable. However, Seagate suggests it be used for cold storage. So how much does SMR hurt performance? Because this is the first SMR drive out there I will be doing some additional tests to try and find the limits of the technology. If you are not interested in this, feel free to skip to the next page for the regular benchmarks.

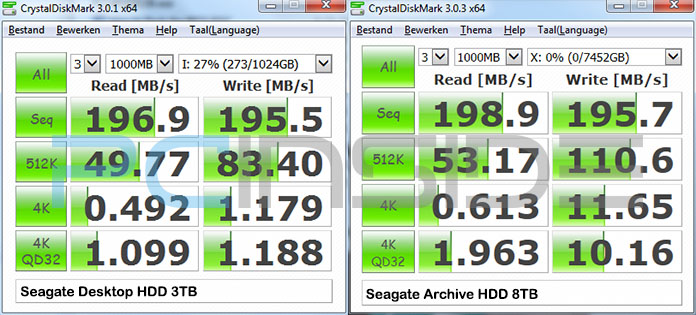

First let’s see how the Seagate Archive HDD handles itself in a direct comparison with its Desktop brother.

These numbers are actually quite impressive. The Seagate Archive HDD outperforms the Seagate Desktop HDD 3 TB in a lot of areas. Surprisingly, the smaller files show large gains. It is clear that Seagate has implemented a large write cache and is making good use of it. Even the SSHD hybrid drives do not reach over 2 MB/s on 4K QD32 random reads/writes.

The 33% rumor

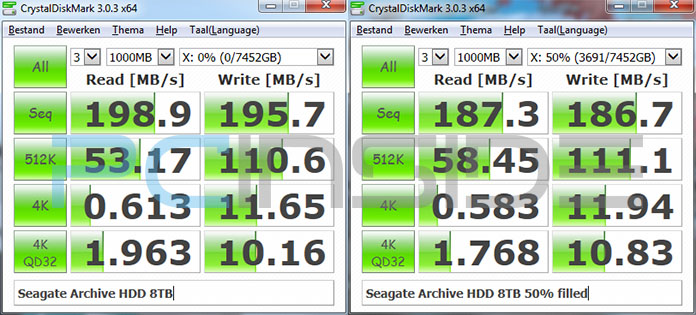

There were some reports on Reddit that the drive becomes significantly slower once it is 33% full. The school of thought behind this myth is that the drive does not use SMR at first and only switches to an SMR mode once it fills up. To check this myth I tested the drive both empty and 50% filled.

As expected, performance goes down as it does on any other hard drive: the platters spin at a constant rate, so the outer tracks pass under the head faster than the inner ones and throughput falls as the drive fills up. But the drop is hardly significant. Myth busted :).

The 20 GB cache

Another rumor is that the drive has a 20 GB cache and that the drive becomes extremely slow once it is full. To check that I copied over 321 GB of video files from a remote location over a 1 Gbit (1000 Mbit) link and ran a new benchmark immediately after that operation.

The first thing I noticed was that, even though the 321 GB transfer took about an hour, the performance never dropped below 110 MB/s, which is the maximum performance of a typical Gigabit LAN. This is good news for anyone thinking of using this drive in a NAS environment.
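As a quick back-of-the-envelope check on those figures, the sketch below converts the link speed to MB/s and estimates how long 321 GB takes at a steady 110 MB/s. It assumes decimal units (1 GB = 1000 MB) and ignores protocol overhead; the helper names are made up for this example.

```python
def gigabit_ceiling_mb_s(link_mbit=1000):
    """Raw link rate in MB/s, before any protocol overhead."""
    return link_mbit / 8


def transfer_minutes(size_gb, rate_mb_s):
    """Minutes needed to move size_gb (decimal GB) at a steady rate."""
    return size_gb * 1000 / rate_mb_s / 60


print(gigabit_ceiling_mb_s())                # 125.0
print(round(transfer_minutes(321, 110), 1))  # 48.6
```

The raw ceiling of a Gigabit link is 125 MB/s, so 110 MB/s is roughly what is left after overhead, and a transfer of this size at that rate lands in the same ballpark as the hour observed.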

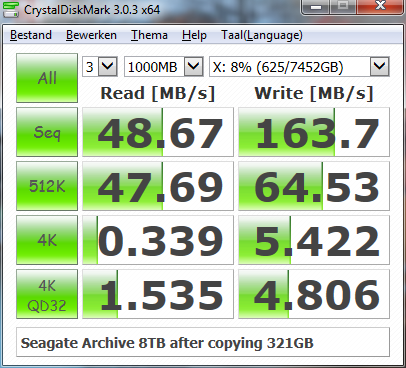

Immediately after the copy operation was complete I ran a new CrystalDiskMark test:

As you can see, the performance has dropped significantly after copying 321 GB. Apparently there is indeed some sort of additional buffer. Because the 321 GB copy operation itself was really stable, the drive seems to be dealing with the buffer now that the operation is complete. Hard drives are really bad at doing two things at the same time, so that explains the sudden drop in read speeds.

So yes, this drive is equipped with a buffer mechanism and it is possible to experience lower performance after prolonged write operations. Nothing too dramatic though.

Creating a “panic” state

The technology in the Archive HDD is completely drive managed. That means the disk itself determines when it does its background tasks. This can explain the results we saw earlier: there was no performance drop during a large file transfer. However, when we stopped, the disk probably decided it was a good time to clean that buffer. At that point true sequential operations are no longer possible and performance goes down. This also means we can try to cause this scenario and then check how bad it can get.
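The behavior described here can be pictured with a very rough state model, sketched below in Python. Everything in it is an assumption for illustration: the buffer size, the drain rate per tick, and the state names are invented, and the real firmware logic is not public.

```python
# Toy model: incoming writes land in a staging buffer and the drive only
# drains it while the host is idle. If writes keep coming until the buffer
# is full, the drive is forced into a degraded ("panic") state.
# All numbers are illustrative assumptions, not measured drive parameters.

def simulate(workload, buffer_gb=20.0, drain_gb_per_tick=0.5):
    """workload: GB written per tick (0 means the host is idle).
    Returns the drive state after every tick."""
    fill = 0.0
    states = []
    for written in workload:
        if written > 0:
            fill += written
            if fill >= buffer_gb:
                fill = buffer_gb
                states.append("degraded")
            else:
                states.append("buffering")
        else:
            fill = max(0.0, fill - drain_gb_per_tick)
            states.append("cleaning" if fill > 0 else "idle")
    return states


print(simulate([2, 2, 2, 0, 0], buffer_gb=5, drain_gb_per_tick=2))
# ['buffering', 'buffering', 'degraded', 'cleaning', 'cleaning']
```

The point of the sketch is only that a drive-managed design has two ways to get slower: cleaning during pauses, and being pushed past the buffer with no pause at all.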

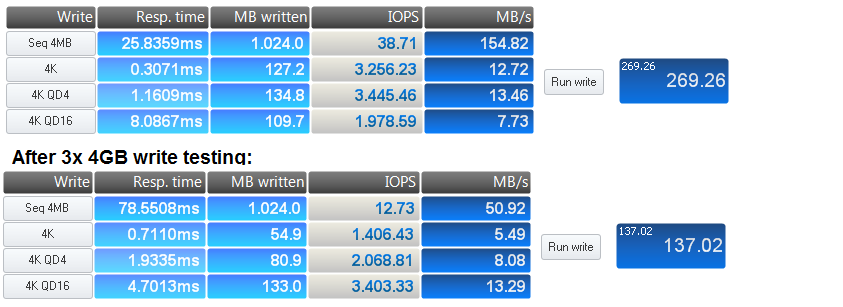

My first attempt was throwing a lot of writes at the disk. I used Anvil’s Storage Utilities and executed the write test 3 times in a row with 4 GB of data. I threw away these (good) results and instead did the regular test of 1 GB to compare the results with the “idle” state:

As you can see, the performance on the sequential writes goes down significantly. The 4K performance also drops, but it is still superior to any other hard disk drive I have ever tested. In fact the drive is coping very well with this scenario. So apparently a lot of writes will dent, but not really break, sequential write performance. The 128 MB RAM cache probably takes care of that. This means that tasks this drive was not intended for, such as video surveillance recording, certainly aren’t impossible.

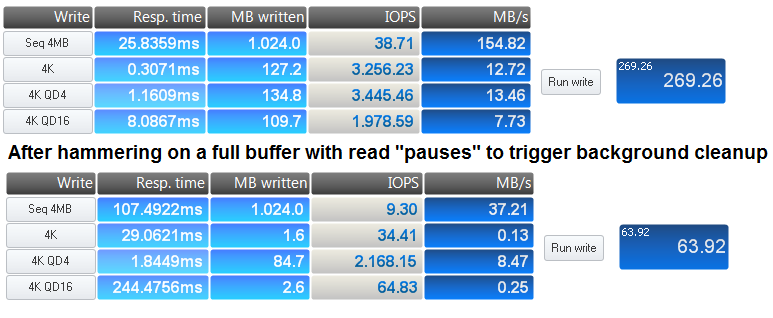

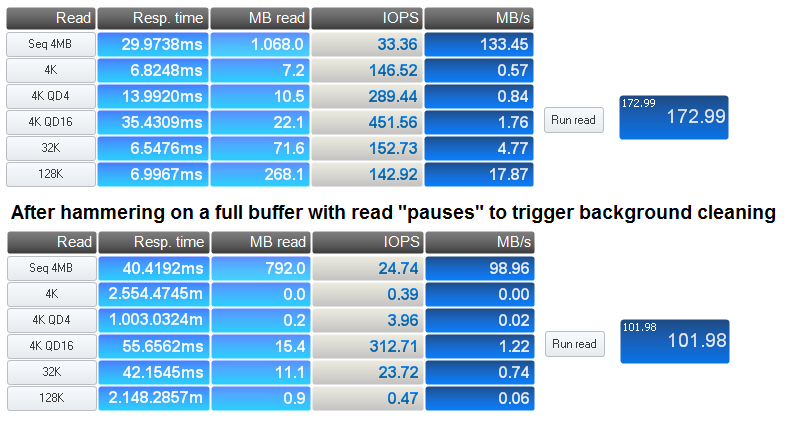

Having failed to really “break” SMR, it was time to try something else. I continued directly from my write test, thereby ensuring that the 20 GB buffer was pretty much full. Now I did an alternating test, meaning that a file is written, 6 read sub-tests are executed and thereafter 4 write sub-tests are executed. After that I ran another test that did exactly the same thing. With this test I am “hoping” that the reading phase makes the cleaning mechanism kick in, thereby interfering with the results of the second and final test.
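For readers who want to reproduce the shape of that access pattern without Anvil, the following Python sketch alternates a file write, 6 random-read rounds and 4 random-write rounds. It is only an approximation of the pattern, not a re-implementation of the benchmark: the function names, block size and operation counts are assumptions, and because it uses ordinary buffered file I/O the operating system's cache will absorb much of the read load unless the test file is far larger than RAM.

```python
import os
import random
import tempfile
import time

BLOCK = 4096  # 4K blocks, as in the 4K sub-tests


def timed(fn):
    """Run fn and return the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    fn()
    return time.perf_counter() - start


def alternating_pass(path, size_bytes, reads=6, writes=4, ops=64):
    """One pass: write the test file, then `reads` random-read rounds,
    then `writes` random-write rounds. Returns seconds per phase."""
    blocks = size_bytes // BLOCK
    payload = os.urandom(BLOCK)

    def fill():
        with open(path, "wb") as f:
            for _ in range(blocks):
                f.write(payload)
            f.flush()
            os.fsync(f.fileno())  # push the data out of the OS cache

    def random_reads():
        with open(path, "rb") as f:
            for _ in range(ops):
                f.seek(random.randrange(blocks) * BLOCK)
                f.read(BLOCK)

    def random_writes():
        with open(path, "r+b") as f:
            for _ in range(ops):
                f.seek(random.randrange(blocks) * BLOCK)
                f.write(payload)
            f.flush()
            os.fsync(f.fileno())

    return {
        "fill": timed(fill),
        "read": [timed(random_reads) for _ in range(reads)],
        "write": [timed(random_writes) for _ in range(writes)],
    }


# Demo on a tiny temporary file; point `target` at the SMR drive and use a
# much larger size to get anything resembling a real measurement.
with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "smr_test.bin")
    first = alternating_pass(target, 1024 * BLOCK)
    second = alternating_pass(target, 1024 * BLOCK)
    print(len(second["read"]), len(second["write"]))  # 6 4
```

Comparing the timings of `first` and `second` mirrors the idea of the test: the second pass is the one that should suffer if the drive starts cleaning in between.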

As intended, this is where the write performance completely breaks down. The sequential and random writing speeds are awful. This time the random read speeds are also greatly affected:

Although the sequential read speeds are still good enough, the random read performance is practically absent.

So how long does this last? Not very long: after a 15 second pause I did another run and the values were already recovering. Random reads were almost back to their regular levels of 100-300 IOPS. Sequential writes had recovered to 57 MB/s and random writes were back to 13, 9 and 5 MB/s.

What I am basically showing here is that you can create a pileup that lowers the performance. That said, achieving that result requires some extreme usage patterns. The only benchmark that allowed me to do this is called “Anvil” because you literally have to hammer the drive. Also, the time spent in this “degraded” state of performance seems to be really short (i.e. seconds, not minutes).

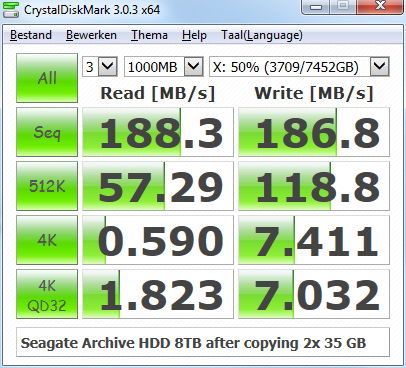

As a final check I decided to test a more realistic scenario: copying 2x 35 GB back to back to the 50% filled drive and then re-testing. I believe this is a much more common scenario for day-to-day usage, yet still one this drive should be bad at due to the technology used.

As you can see, the 70 GB disk-to-disk transfer did not put a real burden on the drive. Drops in sustained performance really seem to be a non-issue for typical usage.

Analyzing the SMR results

We have seen that you can break performance, but you have to do some weird stuff in order to get there. Therefore the real question we should ask ourselves is how likely that scenario is in real-world applications.

How often are you continuously bursting your drive with random writes and alternating that with something that requires decent random read performance? For a regular user this scenario is highly unlikely to ever take place. The only exception is rebuilding a ZFS RAID or RAID 5/6 array, which makes these drives unsuited for such RAID applications. So the “extreme” panic state should be a non-issue as long as you keep that in mind.

But what about filling the buffer with large write operations? How often are you throwing more than 20 GB at your hard disk drive? For me personally that will not happen often. Individual video files are usually no larger than 8 GB. Still, it might happen when you put machine backups on it (100+ GB each). However, these should be scheduled nightly. So in general this reduced performance state is unlikely to ever bother me. But it could happen to you.

So let’s discuss the impact of a filled buffer. Are we in trouble? If we are talking NAS usage then you end up with the temporary 48 MB/s sequential read speed we measured earlier with CrystalDiskMark. That still allows for 384 Mbit/s of network throughput. The highest quality Blu-rays are about 35 Mbit/s, so for a single streaming client this will be a non-issue. This of course assumes you are defragmenting your drive as you should, so all data can be read sequentially.
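The arithmetic behind that estimate is simple enough to write down. The small Python sketch below converts the measured read speed to Mbit/s and divides by the bitrate of one stream; the helper names are made up for this example and network overhead is ignored.

```python
def mb_s_to_mbit_s(mb_per_s):
    """Convert megabytes per second to megabits per second."""
    return mb_per_s * 8


def max_streams(read_mb_s, stream_mbit_s):
    """Whole number of streams the read speed can feed at once."""
    return int(mb_s_to_mbit_s(read_mb_s) // stream_mbit_s)


print(mb_s_to_mbit_s(48))   # 384
print(max_streams(48, 35))  # 10
```

So even in the degraded state there is, on paper, room for about ten such streams, as long as the reads stay sequential.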

Is SMR bad?

So, given all the tests I did, do I think SMR is bad? Well, if you use this drive as intended, for cold storage, then SMR will most likely never bother you. In fact, as we will see on the next page, it will help most users achieve greater (random) write speeds when doing consumer-grade tasks. Even in a more traditional desktop setup it is still highly unlikely you will notice the downsides of SMR. However, if you start rebuilding RAID arrays then you are in for a wait. I have heard reports of rebuilds taking up to 57 hours(!).

To summarize our findings, I estimate that for 99% of the users out there an SMR drive with its 20 GB cache will not hurt their performance. Just don’t put it in RAID 5/6 or ZFS without a sequential resilvering algorithm (e.g. no OpenZFS).
